Large language models (LLMs) have transformed artificial intelligence, showing strong capabilities in natural language understanding and generation. Their hundreds of billions of parameters act as adjustable levers that let the models pick out complex patterns in vast amounts of data. Companies such as OpenAI, Meta, and Google have invested enormous resources in building these models, and adding parameters has generally made them more accurate and powerful. That performance comes with significant drawbacks. Training systems of this size requires enormous computational resources, which translates into very high financial costs. For instance, Google reportedly spent $191 million training its Gemini 1.0 Ultra model, a large sum for a single AI model.

The initial financial outlay is not the only concern. The energy consumed in deploying these models is also substantial. By one estimate, a single query to a system such as ChatGPT consumes around ten times the energy of a standard Google search, which points to the ecological cost of scaling up AI technologies. As awareness of that energy consumption grows, a shift toward more sustainable AI becomes necessary.

Searching for Solutions: The Small Language Model Innovation

In response to the growing concerns surrounding LLMs, the AI research community is increasingly exploring small language models (SLMs), which use only a few billion parameters. These models are not designed for broad, general-purpose use like their larger counterparts, but they are efficient and cost-effective on narrowly defined tasks. For example, SLMs can summarize conversations, answer patient inquiries in health care settings, and gather data in smart devices.

Zico Kolter of Carnegie Mellon University notes that for many specific tasks, an 8-billion-parameter model can deliver impressive performance. Because of their size, such models can run on everyday devices such as laptops and smartphones, bypassing the resource-heavy data centers usually required for LLM deployment.
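
A rough back-of-the-envelope estimate helps show why a model of this size can fit on consumer hardware. The sketch below counts only the memory needed to store the weights, ignoring activations and runtime overhead. The bytes-per-parameter table and the 200-billion-parameter comparison point are illustrative assumptions, not figures from the researchers quoted here.

```python
# Storage cost of one parameter in a few common numeric formats.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "int8": 1}


def weight_memory_gb(n_params, dtype="float16"):
    """Approximate memory for the weights alone, in gigabytes (10**9 bytes)."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


# Compare a small model with an illustrative "hundreds of billions" model.
models = [("8B model", 8e9), ("200B model (illustrative)", 200e9)]
for name, n_params in models:
    for dtype in BYTES_PER_PARAM:
        size = weight_memory_gb(n_params, dtype)
        print(f"{name:26s} {dtype:8s} {size:7.1f} GB")
```

Under these assumptions, the 8-billion-parameter model needs about 16 GB for its weights at 16-bit precision, which is within reach of a well-equipped laptop, while the larger model needs hundreds of gigabytes.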

Researchers have been developing methods that let these smaller models benefit from the strengths of their larger counterparts while keeping overhead low. One such approach is knowledge distillation, in which a large model passes what it has learned to a smaller one, much as a teacher guides a student. The smaller model is trained on high-quality data generated from the larger model’s outputs, which streamlines training and reduces reliance on messy, unprocessed internet data.
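
One common way to make the teacher-student idea concrete is to have the student match the teacher's softened output probabilities. The following is a minimal sketch of that loss in plain Python, assuming the classic temperature-scaled formulation. The function names, the logit values, and the temperature of 2.0 are all hypothetical, and real systems may instead train the student directly on text generated by the teacher.

```python
import math


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a higher temperature flattens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(teacher_logits, student_logits, temperature=2.0):
    """KL divergence from the teacher's softened distribution to the student's."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)


# Hypothetical logits over three candidate next tokens.
teacher = [4.0, 1.0, 0.5]
close_student = [3.5, 1.2, 0.4]  # roughly agrees with the teacher
far_student = [0.5, 1.0, 4.0]    # prefers a different token

print(distillation_loss(teacher, close_student))
print(distillation_loss(teacher, far_student))
```

The loss is zero when the student reproduces the teacher's distribution exactly and grows as the two diverge, so minimizing it during training pulls the student toward the teacher's behavior.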

Innovative Training Techniques and Their Benefits

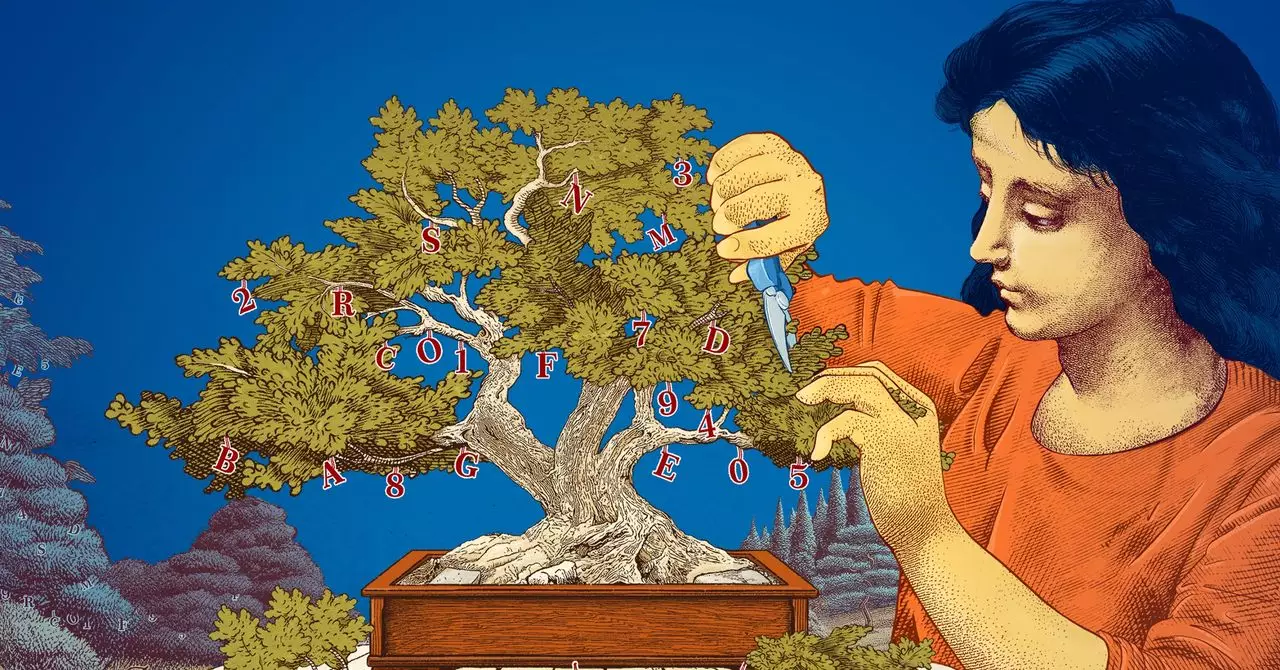

The pursuit of smaller, more efficient models has led researchers to develop creative training methods. Pruning, for instance, removes unnecessary or redundant parts of a neural network, making the model more efficient with little loss in performance. The idea is inspired by the human brain, which becomes more efficient by pruning connections between synapses, and it traces back to seminal research by Yann LeCun in 1989.

LeCun’s work proposed that as much as 90% of a trained neural network’s parameters could be eliminated without significantly impacting its functionality. Pruning can also help researchers fine-tune a small language model for a specific task. And because SLMs have fewer parameters, their behavior can be easier to interpret, which gives researchers a less risky way to experiment and has led to rapid advances in understanding how language models work.
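
As a minimal sketch of the pruning idea, the snippet below applies magnitude pruning, which zeroes out the weights with the smallest absolute values. This is a simple, widely used heuristic chosen for illustration. It is not claimed to be the criterion from the 1989 research, and the weight values and the `magnitude_prune` helper are hypothetical.

```python
def magnitude_prune(weights, sparsity):
    """Zero out the given fraction of weights with the smallest absolute value."""
    if not 0.0 <= sparsity <= 1.0:
        raise ValueError("sparsity must be between 0 and 1")
    n_prune = int(len(weights) * sparsity)
    # Indices ordered from smallest to largest magnitude.
    order = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    to_zero = set(order[:n_prune])
    return [0.0 if i in to_zero else w for i, w in enumerate(weights)]


# Hypothetical weights from one small layer.
weights = [0.02, -1.5, 0.003, 0.8, -0.01, 0.05, 2.1, -0.004, 0.3, 0.001]

# Remove 90% of the weights, keeping only the largest in magnitude.
pruned = magnitude_prune(weights, sparsity=0.9)
kept = sum(1 for w in pruned if w != 0.0)
print(pruned)
print(f"kept {kept} of {len(weights)} weights")
```

In practice, pruning a real network is usually followed by some retraining so that the remaining weights can compensate for the ones removed.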

As Leshem Choshen of the MIT-IBM Watson AI Lab points out, small models let researchers test new ideas with lower stakes. Unlike their larger counterparts, smaller models can be readily manipulated and adjusted, which provides valuable insight into AI behavior and capabilities.

The Balanced Future of Language Models

Large models will continue to play a critical role in broad applications of AI, such as generalized chatbots, image generation, and drug discovery. But there is also a significant opportunity for small, targeted models. These simpler models promise to broaden access to sophisticated AI capabilities while cutting costs and computational demands.

As the world moves toward a more sustainable future, the efficiency of small language models offers a practical way forward. Combining their strengths with improved training methods can make artificial intelligence more accessible, responsible, and innovative. As researchers uncover the potential of small models, AI development appears to be entering a phase defined not by size but by precision, agility, and purpose.