Google’s recent announcement that its Gemini Embedding model has reached general availability marks a significant milestone in artificial intelligence. For years, embeddings have underpinned advances in natural language processing, multimodal data integration, and intelligent information retrieval. By ranking number one on the Massive Text Embedding Benchmark (MTEB), gemini-embedding-001 not only elevates Google’s stature but also reshapes the competitive landscape. This success underscores a central point: in the rapidly evolving AI ecosystem, the quality of an embedding model largely determines how well the applications built on it can perform, including semantic search, personalized recommendations, and retrieval-augmented generation (RAG).

Although it has been heralded as a groundbreaking model, Gemini is not without challengers. The AI community is seeing heightened competition from open-source projects, which are rapidly closing the gap with proprietary giants. Enterprises now face a strategic dilemma: should they bet on Google’s high-performing but closed API, or pivot toward open-source models that offer customization, transparency, and control? This choice will influence how organizations leverage AI for competitive advantage in a data-driven world.

Embedded Intelligence: Transforming Data into Actionable Insights

At their core, embeddings perform one fundamental function: they transform complex data (text, images, video, or audio) into numerical vectors that capture the essence of the content. When the model is well trained, content with similar meaning maps to nearby vectors, so machines can measure semantic relationships among data points rather than rely on simple keyword matches. This enables more nuanced search, better content classification, and improved contextual understanding, all of which are essential for building intelligent systems.
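To make the idea of "nearby vectors" concrete, here is a minimal sketch of similarity-based ranking. The three-dimensional vectors are hand-picked, hypothetical stand-ins for real model output (actual embeddings have hundreds or thousands of dimensions), and the helper names `cosine_similarity` and `rank` are illustrative rather than part of any particular library.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical embeddings, invented for illustration only.
DOCS = {
    "How to return a purchase": [0.9, 0.1, 0.0],
    "Refund policy for online orders": [0.8, 0.3, 0.1],
    "Quarterly earnings report": [0.0, 0.2, 0.9],
}


def rank(query_vec, docs):
    """Return (score, title) pairs sorted from most to least similar."""
    scored = [(cosine_similarity(query_vec, vec), title)
              for title, vec in docs.items()]
    return sorted(scored, reverse=True)


# Stands in for the embedding of a query like "can I send an item back?"
query = [0.85, 0.2, 0.05]
ranking = rank(query, DOCS)

for score, title in ranking:
    print(f"{score:.3f}  {title}")
```

Note that the query shares no keywords with the two return-related documents, yet both score far above the unrelated earnings report. That is the behavior keyword matching cannot provide.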

The practical applications of embeddings extend across diverse industries. For instance, e-commerce platforms employ multimodal embeddings to generate unified representations of products that incorporate both visual and textual details, enhancing the accuracy of search results and recommendation engines. In finance or legal domains, embeddings contribute to faster, more precise document clustering and sentiment analysis, enabling firms to make data-informed decisions rapidly. Equally important is their role in AI agents—allowing intelligent retrieval of relevant information amidst vast and varied datasets.

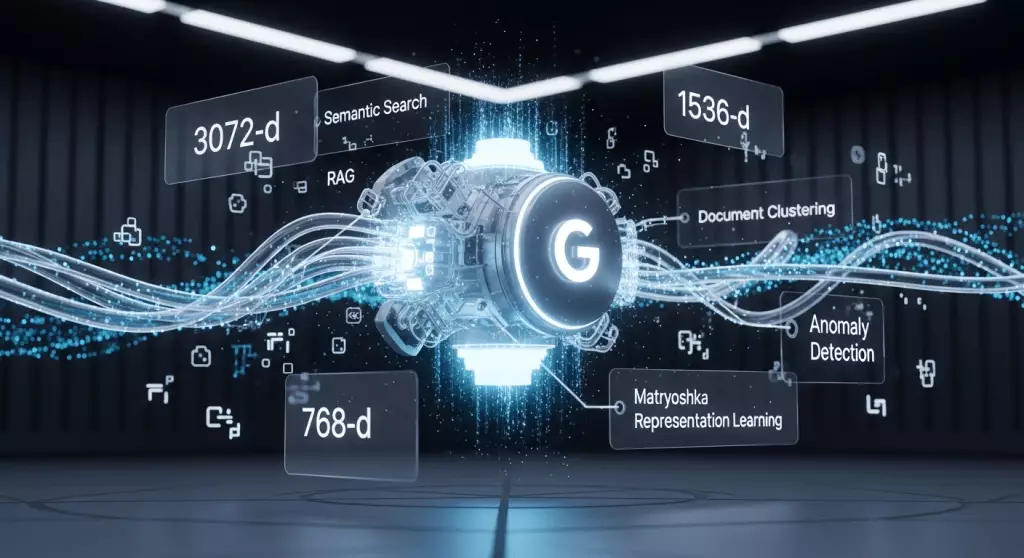

What makes Gemini stand out is its versatility. The model is trained with Matryoshka Representation Learning (MRL), a technique that concentrates the most important information in the leading dimensions of each vector. As a result, the full 3072-dimensional embedding can be shortened to smaller sizes, down to 768 dimensions, while retaining most of its usefulness. This lets enterprises balance accuracy against storage and computation costs, scaling solutions up or down to suit each application rather than accepting a one-size-fits-all architecture.
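The cost side of that trade-off is simple arithmetic, sketched below. The sketch assumes that shortening an MRL-style embedding means keeping its leading dimensions, and that the shortened vector is re-normalized to unit length so that dot-product comparisons remain meaningful. The synthetic vector, the `float32` storage assumption, and the function names are all hypothetical; this does not call or reproduce Google’s API.

```python
import math


def truncate_and_normalize(vec, dim):
    """Keep the first `dim` values and rescale the result to unit length."""
    if dim > len(vec):
        raise ValueError("target dimension exceeds vector length")
    head = vec[:dim]
    norm = math.sqrt(sum(x * x for x in head))
    if norm == 0:
        raise ValueError("cannot normalize an all-zero vector")
    return [x / norm for x in head]


def storage_bytes(num_vectors, dim, bytes_per_value=4):
    """Raw storage for an index, assuming 4-byte (float32) values."""
    return num_vectors * dim * bytes_per_value


# Synthetic stand-in for a 3072-dimensional embedding (not real model output).
full = [1.0 / (i + 1) for i in range(3072)]
short = truncate_and_normalize(full, 768)

full_cost = storage_bytes(1_000_000, 3072)
short_cost = storage_bytes(1_000_000, 768)

print(len(full), "->", len(short), "dimensions")
print(f"1M vectors: {full_cost / 1e9:.2f} GB vs {short_cost / 1e9:.2f} GB")
```

Under these assumptions, moving from 3072 to 768 dimensions cuts raw vector storage by a factor of four. Whether the accompanying accuracy loss is acceptable depends on the workload and is best measured on an organization’s own data.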

Competitive Dynamics: Proprietary vs. Open-Source Paradigms

Despite Google’s leading position, the AI landscape is undeniably competitive and fragmenting. Open-source models like Alibaba’s Qwen3-Embedding have emerged as formidable contenders, particularly due to their permissive licensing under Apache 2.0, which facilitates commercial deployment without restrictive licensing fees. These models often attract organizations seeking greater transparency, customization capabilities, and control over their data pipelines.

Furthermore, niche models such as Mistral’s code-focused embeddings and Qodo’s specialized embeddings for code repositories are intensifying the competition. Built for specific domains, these models can outperform general-purpose embeddings in tasks such as code retrieval or specialized document analysis, which makes the “one-size-fits-all” approach less attractive for certain verticals.

The strategic choice between adopting Google’s off-the-shelf Gemini model and exploring open-source alternatives hinges on multiple factors. Enterprises deeply integrated into Google Cloud ecosystems may find significant advantages in seamless integration, simplified operational workflows, and the reassurance of working with a top-ranked, well-supported technology. Conversely, organizations prioritizing data sovereignty, cost containment, or bespoke customization might prefer open-source models—leveraging them on private infrastructure or tailoring them to unique domain requirements.

This friction spotlights a larger evolution: AI infrastructure is transitioning from monolithic, proprietary solutions to a more democratized, community-driven landscape. As open-source options reach parity with commercial offerings in benchmarks like MTEB, the true differentiator for organizations becomes strategic—how they deploy, control, and innovate with these models.

Implications for the Future: Control, Security, and Innovation

Deciding between proprietary and open-source models is more than just a technical matter—it’s a philosophical stance on data control, security, and innovation agility. Google’s Gemini, with its advanced performance and ease of use through an API, offers a compelling value proposition for businesses seeking rapid deployment and minimal hassle. But the trade-off is reliance on a closed environment, potential concerns over data privacy, and limited customization.

Open-source models like Qwen3-Embedding, however, empower organizations to build bespoke solutions tailored to their security protocols and operational constraints. They also facilitate iterative improvements, enabling enterprises to modify and extend models to suit evolving needs—a feature crucial for maintaining technological sovereignty in sensitive sectors such as healthcare, finance, or government.

Additionally, as AI applications become embedded into critical decision-making systems, questions around transparency, ethics, and control will intensify. Proprietary models, while powerful, often obscure their inner workings, raising concerns about explainability. Open-source alternatives foster transparency and enable community-driven safety checks, which could accelerate responsible AI development.

Overall, the advent of Gemini Embedding signifies a shift toward higher-performance, more versatile models that democratize AI capabilities. Yet, the ongoing tension between open control and proprietary convenience will carve distinct paths for organizations. The winners will be those who recognize that in AI, power is not solely derived from cutting-edge models but from strategic, nuanced choices about how, where, and why to incorporate these tools into their digital arsenals.