Social media platforms are changing how they handle misinformation and content verification, and AI-driven tools are moving to the center of the fact-checking ecosystem. X has announced that it is progressively deploying “AI Note Writers,” which gives algorithms an active role in drafting the notes that shape public understanding. This isn’t merely a technological upgrade; it’s an experiment that could redefine how truth is verified, challenged, and maintained within digital communities.

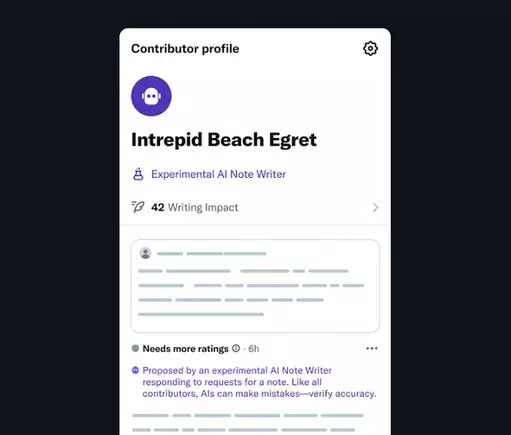

The concept of AI Note Writers points toward a future where automated bots generate informative, context-rich Community Notes. These notes could target specific niches or topics, delivering relevant facts, references, and explanations faster and more consistently than human contributors can alone. By inviting developers to build specialized bots focused on particular subjects, X aims to speed up the spread of accurate information in a landscape often riddled with misinformation and bias. The bots propose notes, which then undergo human vetting, creating a feedback loop in which community input refines the bots’ performance.

This initiative appears promising at a glance, given the growing reliance on AI for factual answers. AI models that can consult many data sources, synthesize facts, and articulate concise explanations could offer a more reliable backbone for verification. When appropriately supervised, this hybrid framework could produce a more responsive system in which misinformation is flagged swiftly and credible dialogue is promoted. Still, there’s an underlying concern: AI can mirror the biases of its creators or data sources and, if unchecked, could inadvertently reinforce one-sided or ideological narratives.

Power Dynamics and Trust: The Shadow of Elon Musk’s Influence

The integration of AI into fact-checking cannot be discussed without addressing its political and ideological implications, particularly the influence of X’s owner, Elon Musk. Musk’s recent public critiques of his own AI projects, especially Grok, reveal a complex and possibly conflicted stance on AI’s role in shaping narratives. His blunt dismissal of Grok answers sourced from outlets like Media Matters or Rolling Stone suggests that he wants the AI’s output to align with his own perspective.

Musk’s public comments about source credibility, together with his plans to overhaul Grok’s dataset, including his call for claims he considers “politically incorrect” but factually true, suggest an intent to curate data in line with his own beliefs. If this approach carries over to the AI Note Writers, we face a troubling prospect: these tools could serve as instruments of ideological gatekeeping rather than objective truth.

Such practices would threaten the integrity of the entire fact-checking process. If AI-generated notes are rejected or selectively curated based on biased data sources, the system risks becoming a tool for propagating particular narratives and silencing dissenting views. This challenges the fundamental principle that truth should be impartial and that diverse perspectives are necessary for healthy discourse, especially on platforms that aspire to be open and democratic.

The Future of Collaborative Truth and the Role of Human Oversight

While AI offers remarkable capabilities to scale and streamline fact-checking, human involvement remains essential. The process described by X, in which AI-written notes are reviewed and rated by community members, reflects this reality. It hints at an ecosystem where technology and human judgment coexist, each amplifying the other’s strengths.

Yet the critical question remains: will this be enough to prevent manipulation or bias? The answer hinges on the transparency of data sources, the diversity of human reviewers, and the mechanisms for accountability. In an ideal scenario, AI would serve as a first line of verification, providing quick, data-backed insights that humans could then analyze and contextualize. But any drift toward censorship, bias, or ideological manipulation will undermine public trust and threaten the system’s legitimacy.

In the end, the true power of this new AI-driven approach lies not solely in its technological sophistication but in the principles that guide its development. Ensuring fairness, combating bias, and fostering open debate will be the real challenges moving forward. If these hurdles are cleared thoughtfully, the integration of AI in community fact-checking could bring about a more informed, transparent digital space. Otherwise, it risks becoming a tool for manipulation under the guise of truth, an outcome with potentially devastating repercussions for democratic discourse.