Artificial intelligence has rapidly transitioned from a specialized tool to a ubiquitous component of our digital landscape. While many developers and companies tout their AI products as safe and regulated, a closer examination of these claims reveals a troubling reality: safeguards are often superficial or outright absent. The case of Grok Imagine exemplifies this dangerous trend. Despite being marketed as an innovation in AI-generated media, it fails to implement basic protections against harmful content. The presence of a “spicy” mode that produces nude or sexually suggestive content with minimal oversight exposes the folly of relying on superficial safeguards in a rapidly evolving technological space. The assumption that users will adhere to ethical boundaries or that AI systems can self-regulate is fundamentally flawed. Instead, these tools let individuals act on their worst impulses with minimal friction, creating an environment rife with potential for harm.

The Ethical Vacuum Fueled by AI’s Inconsistent Policies

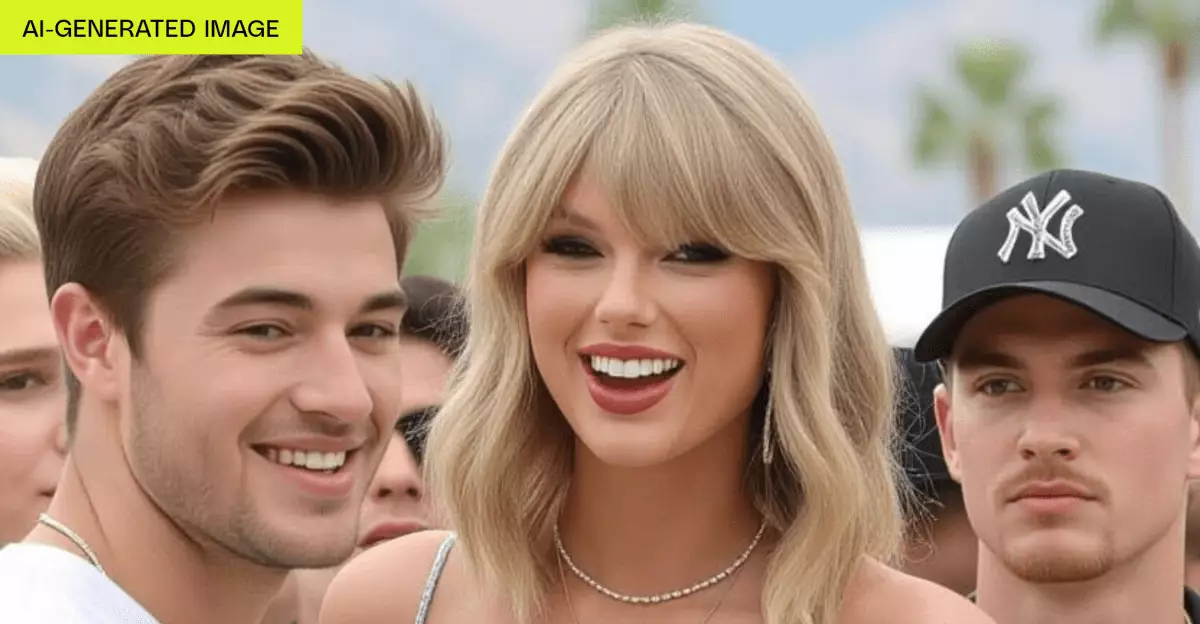

One of the more egregious aspects of the Grok Imagine platform is its blatant disregard for responsible content policies. While the company’s official stance appears to ban explicit and exploitative imagery, the operational reality contrasts starkly with it. The AI’s ability to generate realistic images and videos of celebrities in compromising situations, and to do so with ease, underscores a dangerous disconnect between policy and practice. The system’s “spicy” mode, marketed as an entertainment feature, inherently encourages the creation of NSFW, suggestive, or even harmful deepfakes. That it can produce content resembling minors or non-consensual imagery without adequate safeguards is a reminder that technology often outpaces regulation. The superficial implementation of age checks and the absence of verified identity measures further amplify this vulnerability. Such gaps not only enable abuse but also create fertile ground for malicious activities, ranging from harassment to blackmail.

The Illusion of Control in a Chaotic Landscape

The core issue with AI-generated content is the illusion that these powerful tools can be safely contained. The companies behind tools like Grok Imagine craft an alluring narrative of innovation, yet their actual practices often reflect negligence or indifference. By providing easy-to-access features that generate deepfake images and videos with minimal oversight, they foster an environment where consent and privacy are easily disregarded. The technology’s uncanny valley imperfections do little to prevent misuse; even flawed output can manipulate perception when adequate safeguards are missing. Moreover, the lax approach to age verification means that minors, who are especially vulnerable, are at risk of exposure to harmful content. Ultimately, the gap between stated policies and real-world application demonstrates a fundamental flaw: many AI companies are more committed to profit and novelty than to ethical responsibility.

The Broader Implications for Society and Regulation

This troubling trend extends beyond individual platforms and reflects broader societal and regulatory challenges. Governments and regulatory bodies have been slow to catch up with the rapid development of generative AI tools. Existing laws, like the Take It Down Act, attempt to address deepfake content, but enforcement remains weak and inconsistent. Companies that skirt these regulations or actively bypass safeguards threaten to undermine public trust in AI technology altogether. Without stringent accountability measures, the proliferation of unregulated AI content risks normalizing harmful behaviors, encouraging harassment, and eroding the boundary between reality and fiction. As AI becomes more accessible, this unregulated landscape may give rise to a new era of digital disinformation, privacy violations, and exploitation. Recognizing and rectifying these deficiencies should be a priority, yet the current approach suggests that many developers prefer to push boundaries for quick profits rather than develop truly responsible systems.

Reflections on Responsibility and the Future of AI

The debate around AI safeguards is not merely about regulatory compliance; it is a moral imperative. The superficial measures we see today are insufficient to prevent the exploitation of vulnerable individuals, the spread of misinformation, or the erosion of digital trust. Products like Grok Imagine exemplify a troubling willingness to prioritize user engagement and revenue over ethical considerations. This reckless approach not only endangers individuals but also casts a shadow over future AI innovations. If the industry continues to downplay the importance of regulation and oversight, the consequences could be severe, including increased societal polarization, victimization, and a loss of faith in technology. It is imperative that developers, regulators, and stakeholders collaborate to establish real, enforceable safeguards that prioritize human dignity and privacy over mere profit motives. Only through deliberate and sustained efforts can we hope to harness AI’s potential responsibly, ensuring it serves society rather than being weaponized against it.

Note: This perspective advocates for stricter regulation and ethical oversight, emphasizing the urgency of proactive measures to mitigate harm. AI’s transformative power must be matched with a commensurate sense of responsibility to prevent it from becoming a tool of exploitation rather than enlightenment.