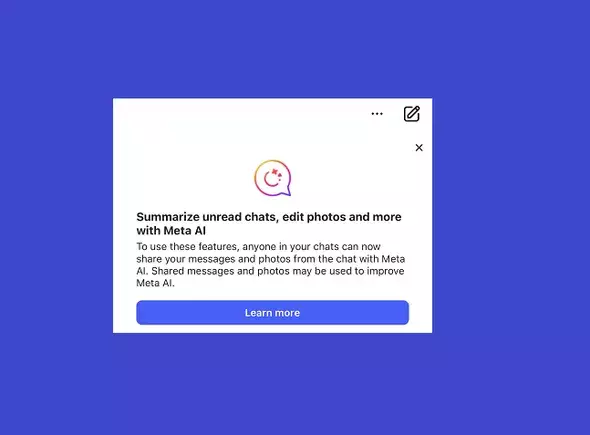

In recent days, users of Meta platforms like Facebook, Instagram, Messenger, and WhatsApp have been greeted with notifications regarding the company’s latest feature: the integration of AI tools into their private messaging systems. While the prospect of using AI to respond to queries within direct chats might seem appealing, the implications for user privacy and data security warrant careful examination. As Meta rolls out this feature, it raises critical questions about the extent to which users understand and control the information they share in these environments.

As the technology landscape evolves, Meta aims to position itself as a pioneering force in AI. By allowing users to engage its AI directly within messaging chats, the company is inserting AI into conversational exchanges in a way that is unprecedented for private messaging. These innovations, however, come with a stark reminder of the vulnerabilities inherent in such features. Users are now informed that their messages, images, and shared content might be used to train the AI model, particularly if other participants within the chat choose to engage the AI.

The notification prompts users to be cautious about sharing sensitive information (such as personal identification numbers, financial details, and private conversations) within chats where the AI might have access. The advice to remain mindful rings hollow when many users may not grasp the full consequences of blending artificial intelligence with personal discussions.

One of the more alarming aspects of this updated functionality is the enduring nature of user consent. Most individuals, when signing up for Meta services, scroll quickly through extensive permissions and terms without fully comprehending their ramifications. In this case, it appears users have unwittingly consented to AI’s involvement in their conversations, raising the question: can anything meaningful and personal ever exist in a space managed by corporate entities with overarching data mining capabilities?

The reality is stark: users can neither designate specific messages as “off-limits” for AI analysis nor revoke permissions for how their shared content is treated. Those concerned about their data privacy face limited options. They can refrain from interacting with AI during chats (which offers little protection if another participant engages it), delete relevant content, or abandon the platform altogether. These steps may seem simple, but they reflect a larger issue: a lack of user-centric design in how privacy is managed online.

Despite these privacy concerns, the integration of AI does offer some conveniences. Instant access to responses or assistance within ongoing conversations can enhance the user experience, particularly for individuals who seek to streamline their interactions online. Yet this convenience comes at a cost; the risk of compromising personal conversations may deter many from fully using the feature.

Furthermore, the practical value of such AI integration can be called into question. If users need to be hyper-vigilant about not inadvertently sharing sensitive information, is the feature beneficial enough to warrant its use? In many cases, a separate conversation with Meta AI could serve the same purpose without the added privacy concerns, which makes the in-chat version look largely redundant.

In an age where the boundaries of privacy are continually tested, the introduction of AI into messaging apps adds layers of complexity to how individuals communicate. Users are left navigating uncertain terrain where the implications of a seemingly benign feature may extend far beyond initial expectations. Meta does disclose its data practices through in-app disclaimers, but users’ actual understanding of those practices may lag well behind the rollout.

Ultimately, whether Meta’s AI in chats constitutes an overreach may depend on individual user perspectives. For some, the integration could enhance their social media experience, while for others, it may feel invasive and alarming. Thus, it is imperative that both Meta and its users engage in ongoing dialogue surrounding data privacy, leading to greater awareness and more robust protections as AI technology continues to evolve. In the end, users must make informed, conscious choices about how they interact with these platforms, weighing the allure of convenience against the fundamental right to privacy.