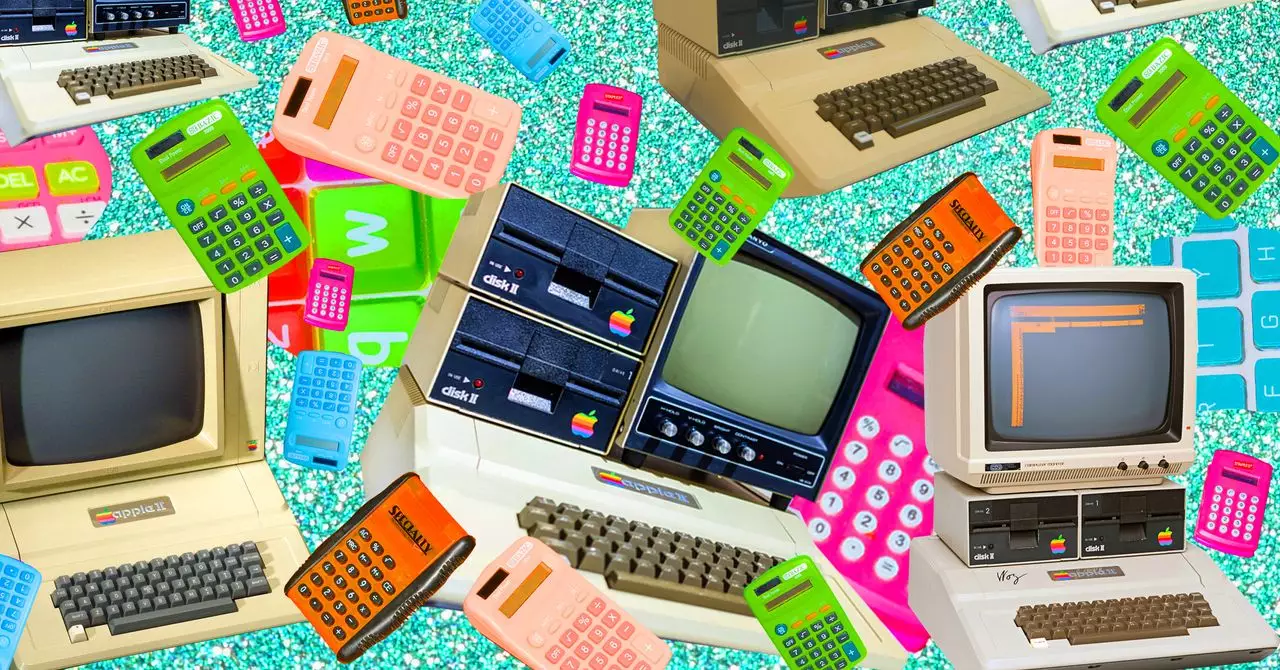

The infusion of technology into education has historically been driven by both strategic corporate initiatives and societal needs. Pioneering companies like Apple played a pivotal role in bringing computers into schools. By combining legislative advocacy, such as lobbying for tax breaks on donated equipment, with direct contributions, Apple set a precedent that reshaped classrooms. Its philanthropic efforts, exemplified by the donation of nearly 10,000 Apple IIe computers to California schools in the early 1980s, not only provided immediate access to new tools but also signaled a shift toward embracing digital literacy. Over the following decades, that initial wave expanded dramatically. The computer-to-student ratio in US schools went from a stark 1:92 in 1984 to 1:4 in 2008, and one device per student had become the norm by 2021. These investments, increasingly driven by educational policy and technological innovation, aimed to bridge educational gaps and prepare students for a digital economy. Yet despite these advances, a critical perspective asks whether embedding technology signifies genuine progress or a superficial adoption that overlooks core pedagogical principles.

The Growing Pessimism: Is Technology Diluting Education’s Foundations?

Amid rapid technological implementation, a growing chorus of skeptics warns that the fixation on devices may be overshadowing fundamental educational values. Critics such as A. Daniel Peck challenge the narrative that more technology equals better learning. They argue that society’s fervor for digital tools, sometimes described as a “computer religion,” risks eclipsing essential skills such as literacy, critical thinking, and social-emotional development. Peck’s concerns echo long-standing fears that technological enthusiasm produces superficial reforms, ones that prioritize gadget proliferation over meaningful instruction. The push to integrate computers and digital devices often appears driven more by technological agendas than by evidence of effectiveness. Interactive whiteboards and internet access are celebrated for their potential, yet their implementation frequently amounts to surface-level coverage rather than a transformation rooted in sound pedagogy. The fear that schools may chase shiny new gadgets at the expense of learning points to a deeper dilemma: adopting technology without thoughtful integration can dilute the very essence of education.

Technological Tools: Expensive Luxuries or Essential Educational Investments?

The economic dimension of educational technology adds another layer of complexity. Interactive whiteboards, which spread rapidly through schools from the late 1990s onward, symbolize the ongoing struggle to weigh costs against benefits. By 2009, nearly a third of K-12 classrooms had adopted these “wall screens,” yet critics raised concerns about both their high cost, ranging from $700 to over $4,500, and their actual pedagogical value. Many educators questioned whether such large, fixed hardware was the best use of limited resources, suggesting that the money might be better spent on laptops, tablets, or other portable devices more conducive to personalized learning. The same debate extends beyond hardware. Internet connectivity, once a radical novelty, became ubiquitous in public schools by the early 2000s thanks to programs like E-Rate, which channeled billions of dollars into subsidizing access. While the web has opened unprecedented avenues for information and interaction, critics contend that this technological zeal often neglects foundational concerns such as digital literacy, cybersecurity, and distraction. The costs and benefits of these investments remain contested, exposing the tension between technological enthusiasm and fiscal prudence.

The Internet Boom: Revolutionary Innovation or Educational Distraction?

The arrival of the internet marked a turning point in educational technology, as schools eagerly embraced it as a gateway to limitless information. The World Wide Web, opened to the public in 1991 and popularized by the Mosaic graphical browser in 1993, rapidly found its way into classrooms. By 2001, 87% of instructional rooms in US public schools had internet access, bolstered by federal initiatives like E-Rate. Even during this surge, critics voiced unease about overreliance on the new medium. While the internet expanded the horizons of student learning, fears persisted that children would be exposed to distraction, misinformation, and cyberbullying. Some skeptics argued that policymakers and educators were dazzled by the internet’s potential without grasping the pedagogical shifts needed to harness it responsibly. For all its promise, the rapid expansion of online access also highlighted disparities, privacy concerns, and the challenge of fostering digital literacy, questions that remain unresolved. Despite the optimism surrounding the internet’s role in education, critics have consistently warned against treating it as an unqualified good, urging instead a focus on integration that enhances learning rather than merely providing access.

Is Technology Truly Transformative or Just a Passing Fad?

The trajectory of educational technology reveals a pattern of optimism followed by skepticism: each new tool raises hopes of revolutionary change but often results in superficial implementation. From the early computer donations to internet ubiquity and the rise of interactive whiteboards, each stage of expansion has been accompanied by debates over efficacy and priorities. Digital tools offer unprecedented opportunities for engagement, customization, and access, yet their indiscriminate adoption can distort educational goals and inflate budgets. Critical voices argue that true progress depends not on the mere presence of devices but on their thoughtful use, which means integrating them into curricula and focusing on the development of critical skills. The challenge for educators and policymakers is to navigate this landscape without falling prey to technological hype or financial extravagance. In the end, the question remains: will education adapt thoughtfully to technological change, or simply chase the next shiny gadget, trading deep learning for superficial gains?