Advances in artificial intelligence, particularly in natural language processing (NLP), have produced language models that can generate fluent, human-like text. Complex or specialized questions, however, often expose their limitations, which has created demand for systems in which models collaborate to improve the accuracy of their answers. Researchers at the Massachusetts Institute of Technology (MIT) have developed one such approach: an algorithm called Co-LLM that pairs a general-purpose language model with a specialized counterpart so that the two can work together to produce more accurate, better-informed answers.

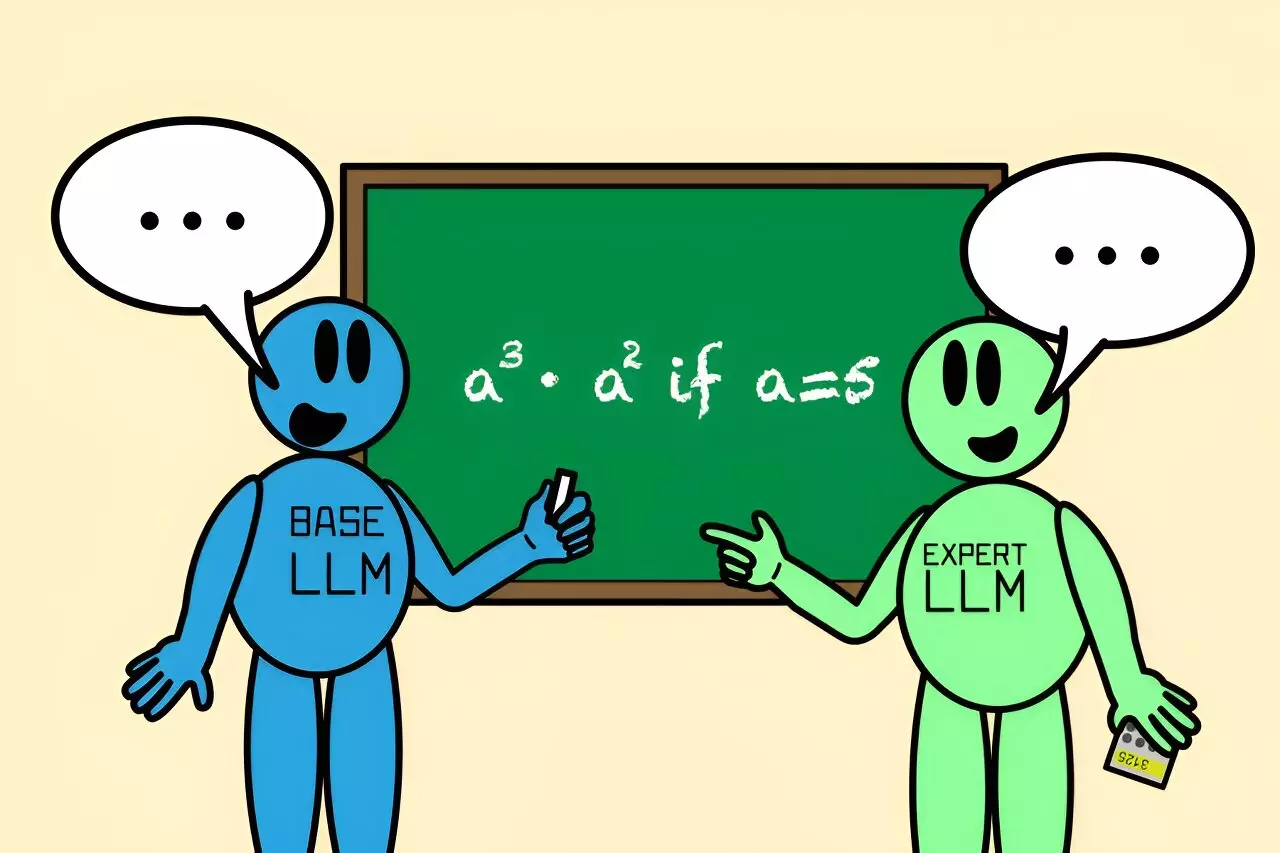

As reliance on large language models grows, so does the need to improve their accuracy. General-purpose models are proficient in many areas but often lack deep expertise in specialized fields such as medicine or advanced mathematics. The challenge is not only generating a response but recognizing when additional expertise is needed. Much like a student who is unsure of an answer and turns to a knowledgeable classmate, Co-LLM lets one model call on another, a simulated collaboration that mimics human teamwork.

Co-LLM pairs a versatile, general-purpose base model with a specialized model trained for domain-specific queries. As the base model generates a response, Co-LLM uses a mechanism called the “switch variable” to examine the answer token by token (a token is a word or word fragment). The switch acts as a decision-maker: for each token, it estimates whether the base model should produce that token itself or defer to the specialized model.
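A minimal sketch of a token-by-token switch, under stated assumptions: the switch is modeled as a sigmoid over a linear function of the base model’s hidden state, and a token is deferred when that probability exceeds a fixed threshold. The names `switch_probability`, `co_decode`, `toy_base`, and `toy_expert`, the weights, and the threshold are all hypothetical, and the two “models” are toy stand-ins that return a made-up hidden state and a next token rather than real logits.

```python
import math
from typing import Callable, List, Sequence, Tuple

# Hypothetical model interface: given the tokens so far, return
# (hidden_state, proposed_next_token). Real LLMs return logits instead.
Model = Callable[[Sequence[str]], Tuple[List[float], str]]


def switch_probability(hidden: List[float], weights: List[float], bias: float) -> float:
    """Probability that the next token should be deferred to the expert."""
    score = sum(h * w for h, w in zip(hidden, weights)) + bias
    return 1.0 / (1.0 + math.exp(-score))


def co_decode(prompt: Sequence[str], base: Model, expert: Model,
              weights: List[float], bias: float,
              threshold: float = 0.5, max_tokens: int = 20) -> Tuple[List[str], List[str]]:
    """Generate tokens one at a time, letting the switch pick base or expert per token."""
    tokens = list(prompt)
    sources: List[str] = []
    for _ in range(max_tokens):
        hidden, token = base(tokens)  # the base model runs at every step
        source = "base"
        if switch_probability(hidden, weights, bias) > threshold:
            _, token = expert(tokens)  # the expert runs only on deferred steps
            source = "expert"
        if token == "<eos>":
            break
        tokens.append(token)
        sources.append(source)
    return tokens[len(prompt):], sources


# Toy stand-ins: the base model is confident except at the answer position.
BASE_DRAFT = ["7", "*", "8", "=", "54", "<eos>"]
BASE_UNSURE = [0.0, 0.0, 0.0, 0.0, 3.0, 0.0]  # fake 1-d "hidden state"


def toy_base(prefix: Sequence[str]) -> Tuple[List[float], str]:
    i = len(prefix)
    return [BASE_UNSURE[i]], BASE_DRAFT[i]


def toy_expert(prefix: Sequence[str]) -> Tuple[List[float], str]:
    # Toy expert that only knows how to complete "a * b =".
    if len(prefix) == 4 and prefix[1] == "*":
        return [0.0], str(int(prefix[0]) * int(prefix[2]))
    return [0.0], "<eos>"


answer, routing = co_decode(["7", "*", "8", "="], toy_base, toy_expert,
                            weights=[2.0], bias=-1.0)
print(answer, routing)  # ['56'] ['expert']
```

Here the base model’s draft of `54` is replaced because the switch fires at that position, while every other step stays with the base model. The hand-set weights stand in for a switch that, in Co-LLM, is learned.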

For example, when asked about extinct bear species, the base model drafts a reply. When the switch variable flags tokens that call for deeper knowledge, such as dates or scientific names, Co-LLM has the expert model generate those tokens instead. This improves accuracy by bringing in specialized knowledge and also saves computation, because the expert model is consulted only when it is needed rather than for every token.
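To make the efficiency argument concrete, here is a back-of-the-envelope cost model, not a measurement from the Co-LLM work. It assumes the base model runs on every token while the expert runs only on deferred tokens, that per-token cost can be summarized by one number per model (for instance, parameter count), and it ignores any extra work the expert needs to process the prefix. The function names and the 7-versus-70 cost figures are illustrative.

```python
from typing import Sequence


def defer_fraction(sources: Sequence[str]) -> float:
    """Fraction of generated tokens that were produced by the expert."""
    if not sources:
        return 0.0
    return sum(1 for s in sources if s == "expert") / len(sources)


def relative_cost(frac_deferred: float, base_cost: float, expert_cost: float) -> float:
    """Per-token cost of collaborative decoding relative to running the expert alone.

    Simplified model: the base cost is paid on every token, the expert cost only
    on the deferred fraction. Prefix-processing overhead is ignored.
    """
    if not 0.0 <= frac_deferred <= 1.0:
        raise ValueError("frac_deferred must be between 0 and 1")
    return (base_cost + frac_deferred * expert_cost) / expert_cost


# Illustrative trace: 2 of 10 tokens (say, a date and a species name) deferred.
trace = ["base"] * 8 + ["expert"] * 2
f = defer_fraction(trace)
print(round(relative_cost(f, base_cost=7, expert_cost=70), 3))  # 0.3
```

Under these assumptions the savings shrink as the deferral rate rises; deferring every token would cost more than the expert alone (1.1 in this example), which is why the switch should defer only where the expert is likely to help.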

A key feature of Co-LLM is that it learns when to defer from domain-specific training data. This data teaches the base model about its counterpart’s expertise in a given domain, so Co-LLM can recognize which parts of an answer are difficult for the base model and route them to the specialist.
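One simple way to turn domain data into a trained switch, sketched here under assumptions rather than as the researchers’ exact procedure: run both models over domain text, label each position “defer” when the expert assigns the reference token a higher probability than the base model does, and fit a logistic-regression switch on the base model’s hidden states. The helper names, the toy hidden states, and the probabilities below are hypothetical; in a real system they would come from the two models’ forward passes over the domain-specific training set.

```python
import math
from typing import List, Sequence, Tuple


def pseudo_labels(base_probs: Sequence[float], expert_probs: Sequence[float]) -> List[int]:
    """1 ("defer") where the expert gives the reference token more probability."""
    return [1 if e > b else 0 for b, e in zip(base_probs, expert_probs)]


def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))


def train_switch(hiddens: Sequence[Sequence[float]], labels: Sequence[int],
                 lr: float = 0.5, epochs: int = 1000) -> Tuple[List[float], float]:
    """Fit a logistic-regression switch with full-batch gradient descent."""
    dim, n = len(hiddens[0]), len(labels)
    w, b = [0.0] * dim, 0.0
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * dim, 0.0
        for h, y in zip(hiddens, labels):
            # Gradient of the log loss with respect to the linear score.
            err = sigmoid(sum(wi * hi for wi, hi in zip(w, h)) + b) - y
            for j in range(dim):
                grad_w[j] += err * h[j]
            grad_b += err
        w = [wi - lr * g / n for wi, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b


# Toy domain data: four positions with 1-d hidden states from the base model.
hiddens = [[0.0], [0.1], [2.0], [2.5]]
base_p = [0.9, 0.8, 0.1, 0.2]    # base model's probability of each reference token
expert_p = [0.7, 0.6, 0.8, 0.9]  # expert's probability of the same tokens
labels = pseudo_labels(base_p, expert_p)  # [0, 0, 1, 1]
w, b = train_switch(hiddens, labels)
print([round(sigmoid(w[0] * h[0] + b)) for h in hiddens])  # [0, 0, 1, 1]
```

Because the labels come from comparing the two models on domain text, the switch learns where this particular expert tends to outperform this particular base model, which is the sense in which the base model learns its counterpart’s expertise.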

The practical implications are significant. For medical questions, pairing the base model with an expert trained on extensive biomedical literature improves its ability to answer accurately. For math problems, where a general-purpose model working alone may miscalculate, collaborating with an expert model can steer even intricate calculations toward the correct result, reducing the number of wrong answers the system produces.

The research also points to future enhancements. One direction under exploration is a self-correction mechanism that would let the system backtrack and revise a response, drawing on whichever model’s expertise is more reliable at that point. This kind of adaptive behavior mirrors human reasoning, in which people re-evaluate information in light of new insights or corrections.
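Because this is work in progress, the following is a purely hypothetical sketch of one way backtracking could work, not the researchers’ design. It assumes an external check (here a function called `accept`, standing in for something like a calculator or a unit test) can flag a wrong final answer. When that happens, decoding rewinds to the base-generated token where the switch was least confident and regenerates from there with the expert. All function names, the toy models, and the 0.5 threshold are illustrative.

```python
from typing import Callable, List, Optional, Sequence, Tuple

# Hypothetical interfaces: the base returns (switch probability, proposed token);
# the expert returns a token.
Base = Callable[[Sequence[str]], Tuple[float, str]]
Expert = Callable[[Sequence[str]], str]


def decode(prefix: Sequence[str], base: Base, expert: Expert, threshold: float,
           force_expert_from: Optional[int] = None, max_tokens: int = 20):
    """Token-level decoding; positions >= force_expert_from always use the expert."""
    tokens = list(prefix)
    trace = []  # (position, switch probability, used_expert)
    for _ in range(max_tokens):
        pos = len(tokens)
        p_defer, token = base(tokens)
        use_expert = p_defer > threshold or (
            force_expert_from is not None and pos >= force_expert_from)
        if use_expert:
            token = expert(tokens)
        if token == "<eos>":
            break
        tokens.append(token)
        trace.append((pos, p_defer, use_expert))
    return tokens, trace


def decode_with_backtracking(prompt: Sequence[str], base: Base, expert: Expert,
                             accept: Callable[[List[str]], bool], threshold: float = 0.5):
    """Decode once; if `accept` rejects the answer, rewind once and let the expert finish."""
    tokens, trace = decode(prompt, base, expert, threshold)
    if accept(tokens[len(prompt):]):
        return tokens[len(prompt):], False
    base_steps = [(p, pos) for pos, p, used in trace if not used]
    if not base_steps:  # nothing the base model wrote to revisit
        return tokens[len(prompt):], False
    _, rewind_pos = max(base_steps)  # least confident base-generated token
    tokens, _ = decode(tokens[:rewind_pos], base, expert, threshold,
                       force_expert_from=rewind_pos)
    return tokens[len(prompt):], True


# Toy stand-ins: the switch is doubtful at the answer but stays below 0.5.
DRAFT = ["7", "*", "8", "=", "54", "<eos>"]
P_DEFER = [0.0, 0.0, 0.0, 0.0, 0.4, 0.0]


def toy_base(prefix: Sequence[str]) -> Tuple[float, str]:
    return P_DEFER[len(prefix)], DRAFT[len(prefix)]


def toy_expert(prefix: Sequence[str]) -> str:
    if len(prefix) == 4:
        return str(int(prefix[0]) * int(prefix[2]))
    return "<eos>"


result, backtracked = decode_with_backtracking(
    ["7", "*", "8", "="], toy_base, toy_expert, accept=lambda ans: ans == ["56"])
print(result, backtracked)  # ['56'] True
```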

The researchers also see potential in keeping the expert model’s contributions current by updating only the base model’s training when new information becomes available. Because information changes rapidly, this could help Co-LLM stay up to date and provide reliable, accurate assistance in real-world applications.

Co-LLM represents a notable step in the development of hybrid language-model systems, combining general and specialized knowledge in a single collaborative process. By simulating human-like teamwork among language models, the researchers have built a system that can improve accuracy, use computation more efficiently, and reduce errors. As NLP continues to advance, insights from Co-LLM and similar initiatives may help shape future language systems that handle complex questions more accurately and insightfully.