In the competitive landscape of AI integration within everyday applications, Google’s Gemini in Chrome presents a tantalizing glimpse of the future. For tech enthusiasts and casual users alike, Gemini offers an impressive capability: an AI assistant that can “see” what is on your browser screen. However, as with any pioneering technology, the feature also comes with nuances and limitations that users must navigate.

Emma Roth, who writes extensively on technology and consumer behavior, leads this exploration of a digital companion that claims to enhance our browsing experience. By letting users not only talk to an AI but also draw contextual insights from the webpage they are viewing, Gemini changes how we interact with the browser. Nevertheless, this innovation is still in its infancy, marked by a mix of impressive potential and frustrating limitations.

The Mechanics of Gemini Integration

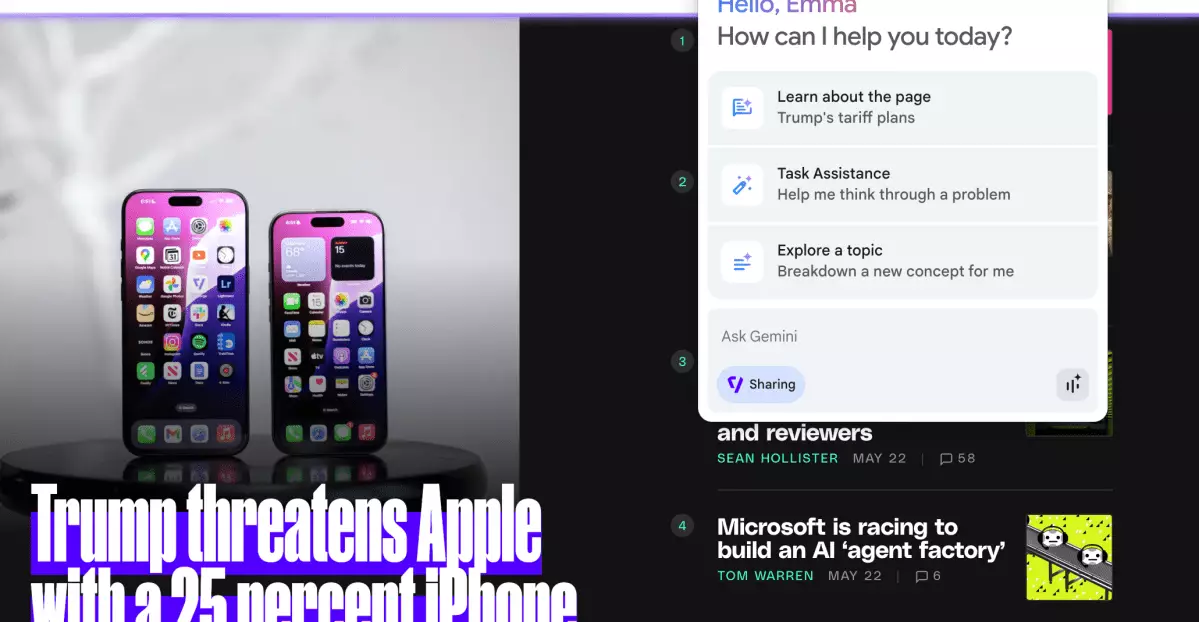

Accessing Gemini in Chrome is as simple as clicking a button in your browser’s top right corner. The integration looks seamless, yet users quickly realize its capabilities are somewhat restricted. Gemini can summarize content and find relevant information in what you have on display, but it lacks the depth that would make it a true digital ally. For example, while it can summarize articles from sites like The Verge or answer questions about YouTube videos, it falls short when asked about specific sections, such as comments or user interactions, unless those elements are directly visible on the screen.

Subscribers to the AI Pro or AI Ultra tiers who are running select versions of Chrome with early access can try these capabilities, yet many may find themselves craving an experience that feels more seamless and intuitive. Roth notes a key observation from her testing: users must constantly adjust how they browse to get the most out of Gemini. For an AI designed to simplify tasks, this requirement feels counterproductive.

Voice Activation and Real-time Feedback

One particularly interesting feature of Gemini is its “Live” mode, which lets users ask questions aloud instead of typing them. This makes for a more engaging interaction, especially while watching other media such as YouTube. Roth found the feature useful for identifying tools and components in demonstration videos on the platform. Gemini’s ability to respond with immediate feedback could indeed shift how we consume online content.

Nonetheless, this impressive feature is not immune to errors. Gemini’s inability to access real-time information leaves users wishing for more depth and accuracy in its responses. For instance, Roth’s inquiries about specific products or dynamic content yielded vague or unhelpful replies that bordered on frustrating. In a fast-paced digital landscape, the AI’s inability to provide immediate results may be one of the major shortcomings limiting Gemini’s broader relevance.

Building a User-Friendly Interface

One glaring issue that Roth highlights is how impractical the interaction can be in terms of screen space and convenience. Gemini’s responses are often too long for a pop-up window, leading to an unwieldy experience that contradicts one of AI’s primary selling points: efficiency. This is particularly concerning for users on smaller displays, such as laptops, where screen real estate is already limited.

Although users can expand the dialogue box for more room, the interface could certainly be optimized for quicker, more digestible responses. The repetitive follow-up questions that Gemini poses can also detract from user satisfaction, complicating an experience in which simplicity should be paramount.

The Road Ahead: Towards an “Agentic” AI

While this early iteration of Gemini certainly has its challenges, it also presents a fascinating opportunity for Google to refine and enhance the integration. The ambition is clear: Google aims to make its AI applications more “agentic,” capable of performing various tasks autonomously. Project Mariner’s “Agent Mode” is particularly promising, indicating that future upgrades may move Gemini closer to a digital assistant capable of managing complex interactions and tasks on behalf of users.

In the grand scheme of things, the current implementation of Gemini in Chrome could lay the groundwork for future advancements. The potential to streamline processes, generate swift responses, and become an essential component of the browsing experience is tantalizing. For now, however, these challenges are hurdles that Google must clear before Gemini can truly flourish as a leading AI assistant. Users remain eager to see whether these enhancements will materialize, transforming how we interact online and making Gemini the invaluable tool it aspires to be.