In an era where digital landscapes are as influential as physical environments, social media platforms wield tremendous power over young minds. Meta’s recent initiatives to enhance teen protections reveal a nuanced understanding of this influence, yet they also expose the challenges inherent in safeguarding impressionable users. These updates symbolize a commendable move towards prioritizing mental and emotional safety, but they also invite scrutiny regarding their true effectiveness and underlying motives.

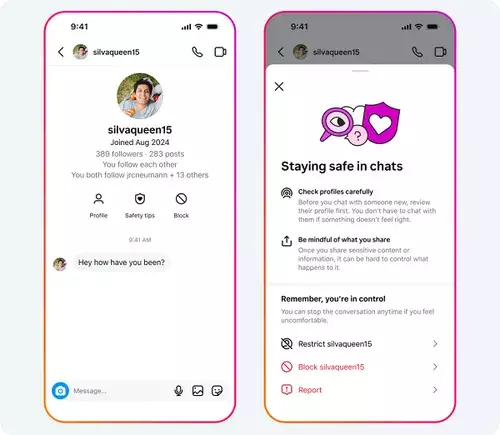

The introduction of “Safety Tips” prompts directly within Instagram chats exemplifies the platform’s shift from reactive to proactive safety measures. By offering immediate access to guidance on spotting scams or interacting securely, Meta attempts to empower teens with knowledge rather than just tools. This approach recognizes that awareness is a critical front in the battle against digital harassment and exploitation. However, the real question remains: will such prompts resonate sufficiently with young users, or will they become background noise in an already noisy digital environment?

Quick-acting features such as a combined block-and-report option simplify the process of disengaging from harmful accounts. This streamlining is a pragmatic move that lowers the barrier to protective action. Showing when an account was created adds context, helping teens judge whether an unfamiliar contact deserves their trust. Nevertheless, the core challenge persists: predators and scam artists continually evolve their tactics. Can these tools alone withstand the sophisticated manipulation and psychological games played online?

Addressing the Darker Realities of Adult-Teen Interactions

Meta’s efforts extend beyond tools aimed at teens. Recognizing the pervasive threat posed by predatory adults who target minors, the platform is working to keep potentially suspicious adults from being recommended accounts that feature children. Its removal of nearly 135,000 Instagram accounts that left sexualized comments on, or requested sexual images from, adult-managed accounts featuring children under 13 underscores the severity of the issue. Yet these numbers also reveal how widespread such activity is, and how hard it remains to detect and dismantle exploitative networks.

What is particularly troubling is how these offenders operate around adult-managed accounts that feature children. The removal of roughly half a million additional Facebook and Instagram accounts linked to the original offenders this year signals a systemic issue rooted in the platform’s architecture: an ecosystem where predators exploit recommendation algorithms to find and lure vulnerable users. Meta’s response reflects moral urgency, but it also highlights the significant gap between policy and what persists on the platform. How effective can removal efforts be if new accounts appear almost as quickly as old ones are deleted?

Striving for a Safer Digital Ecosystem

Despite these hurdles, Meta’s deployment of protective features for teen users is noteworthy. Tools that limit who can message younger users, along with nudity detection and location privacy measures, demonstrate a layered approach to safeguarding. Statistics such as the 99% of users who have kept nudity protection turned on, and the notable reduction in explicit content reaching users, indicate progress. Still, these metrics tell only part of the story: many teens remain exposed despite technological safeguards, often because of complacency about online risks or a lack of awareness.

Enhancing awareness about these tools is crucial. Meta’s initiatives to inform users about safety features are a step forward, but they cannot replace the need for cultural change within online spaces. Encouragingly, Meta’s support for raising the minimum age of access aligns with a broader societal push for stricter limits, possibly extending the age threshold to 16 or beyond. However, such measures seem as much strategic as moral, prioritizing corporate reputation and user retention as much as protection.

Meta’s ongoing efforts highlight a balancing act: protecting vulnerable youths while navigating the commercial incentives to keep users engaged. As social media policies evolve, there is a pressing need to challenge and reimagine the ethical foundations underpinning digital engagement. Ultimately, genuine protection will require not just technological solutions but also societal shifts that recognize and prioritize mental health, consent, and safe online behaviors.

—

Meta’s recent safety initiatives indicate a platform increasingly aware of its responsibility, but they also mirror the persistent and evolving threats in the online realm. Without a deeper cultural transformation that puts user safety above growth metrics, it is doubtful these measures will lead to meaningful change. Protecting teen users is indeed critical, yet it demands more than added buttons and prompts; it requires a fundamental rethinking of how social media is designed, marketed, and governed.