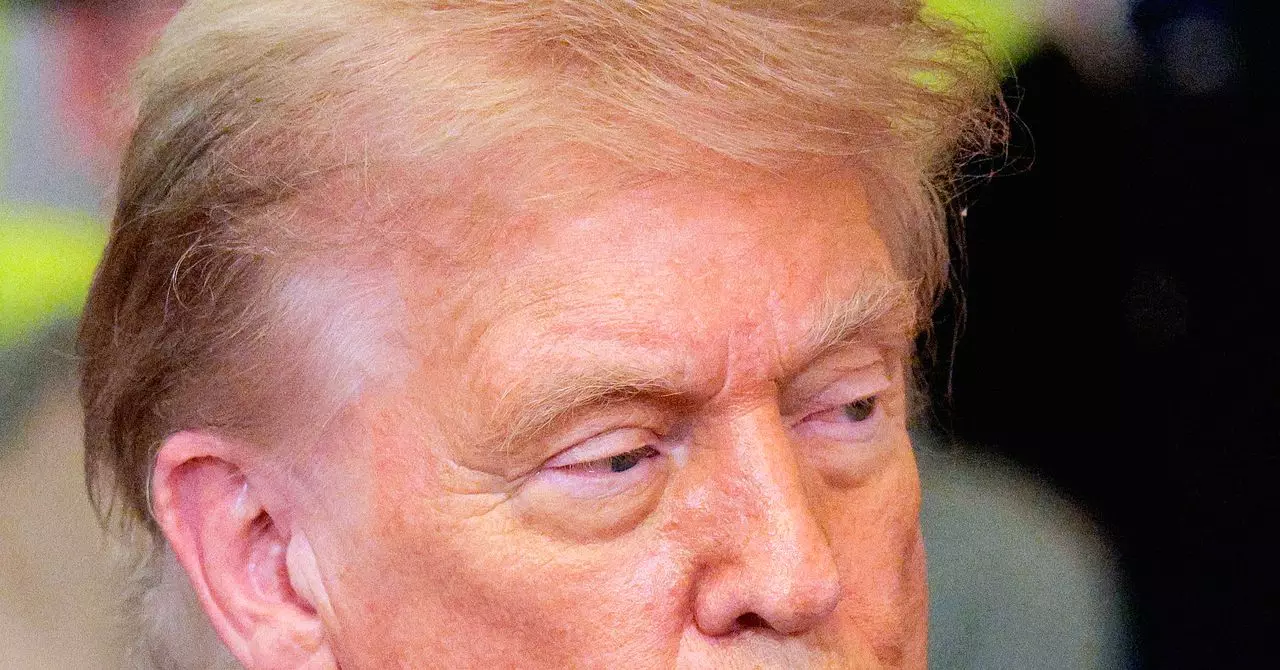

As lawmakers scramble to respond to the rapid growth of artificial intelligence technologies, Congress has found itself embroiled in a heated debate over the proposed AI moratorium. Initially, President Donald Trump’s ambitious “Big Beautiful Bill” incorporated a controversial clause: a 10-year halt on state-level AI regulations. This move, pushed by influential figures like David Sacks, aimed to create regulatory uniformity but soon clashed head-on with critics ranging from state attorneys general to outspoken politicians such as Representative Marjorie Taylor Greene. The uproar surrounding this sweeping moratorium underscores the complexity and urgency of balancing innovation with public protection in an era where AI permeates our daily lives.

From a Decade to Five Years: The Moratorium’s Slimmed-Down Version

Facing widespread backlash, Senators Marsha Blackburn and Ted Cruz introduced a revised proposal that shortened the AI moratorium to five years and added exemptions for certain state laws. This attempt at compromise sought to mollify those wary of a blanket freeze on AI governance. But the trimmed-down scope failed to appease either side: critics dismissed it as a “get-out-of-jail free card” for Big Tech, fearing it would allow the unchecked exploitation of vulnerable groups such as children, artists, and conservative voices online to continue.

Interestingly, Senator Blackburn’s position has swung dramatically within a short timeframe. Initially opposing the moratorium entirely, she then collaborated on this reduced version before renouncing it. This vacillation, while politically understandable, reveals the intricate pressures surrounding AI policy-making: balancing industry interests, state sovereignty, and constituent protections is no simple task. Blackburn’s focus on defending Tennessee’s music industry, in a state that recently enacted laws to combat AI-generated deepfakes of musical artists, adds another layer of nuance to the battle over intellectual property versus innovation freedom.

The Perils of Carve-Outs and Conditional Exemptions

The revised moratorium’s carve-outs, aimed at preserving certain state laws (such as those protecting child safety, preventing deceptive practices, and safeguarding individuals’ likeness and identity), might seem like a victory for advocates of targeted regulation. However, these exemptions come with a critical caveat: the laws cannot impose an “undue or disproportionate burden” on AI systems or automated decision-making processes. This ambiguous and broad qualifier raises alarm bells for legal experts and advocacy groups.

The phrase effectively grants AI companies a defensive shield against many forms of state regulation, potentially hampering robust enforcement of protections for the public. Senator Maria Cantwell’s sharp criticism of this language as enabling “a brand-new shield” highlights how this loophole could be exploited to delay or dilute meaningful rules. The risk here extends beyond tech giants’ immediate interests to core societal concerns such as child online safety, privacy rights, and editorial fairness.

A Fractured Coalition: Uniting Fence-Sitters and Fierce Opponents

What strikes me about this saga is not only the depth of disagreement but also the diversity of opponents to the moratorium—ranging from unions wary of federal overreach to political figures like Steve Bannon who view the pause as a Trojan horse for Big Tech agendas. This fractured coalition reveals that concerns over uncontrolled AI development and insufficient accountability transcend traditional ideological boundaries. It’s a rare convergence that signals how seriously many stakeholders regard AI’s potential harms.

Yet despite this wide array of voices, lawmakers seem paralyzed, offering half-measures that satisfy no one. The moratorium’s revisions, rather than clarifying or strengthening protections, introduce new ambiguities that risk diluting state autonomy and emboldening tech monopolies. The failure to enact a comprehensive federal framework that harmonizes safety, privacy, and innovation is glaring and troubling.

Why Bold Legislation Is the Only Way Forward

At the heart of this debate is an uncomfortable truth: vague compromises and regulatory pauses won’t protect citizens from AI’s rapid and sometimes harmful evolution. Blocking states from regulating AI for years is tantamount to surrendering public oversight to private corporations during one of the most critical phases of AI development. This is especially perilous given AI’s entanglement in social media algorithms, misinformation dynamics, and content moderation, all areas where vulnerable populations bear the brunt of harm.

Legislators must reject the temptation to concede to industry-friendly loopholes and instead champion comprehensive laws like the Kids Online Safety Act and robust online privacy frameworks at the federal level. Such regulations should empower states rather than hinder them and provide clear guardrails that hold AI technologies accountable without stifling progress. Anything less risks handing Big Tech the very “get-out-of-jail free card” critics warn about, embedding systemic vulnerabilities into society’s digital future.